Understanding AI-Generated Election Disinformation

Artificial Intelligence (AI) has become a significant presence in the political landscape, most notably through the dissemination of election disinformation. This phenomenon involves the creation and propagation of seemingly credible yet false information intentionally designed to deceive or mislead the electorate. Sophisticated AI techniques, such as deep learning algorithms, can create strikingly realistic false narratives and fabricated images, audio, or video; the latter are commonly called ‘deepfakes’.

The proliferation of social media has led to a considerable increase in AI-generated election disinformation, significantly impacting voters' decision-making process. AI disinformation is deftly engineered to influence voter perceptions and behaviors, thus distorting the democratic process. Given the potential impact of such malicious activities, it becomes essential to identify and understand AI-generated election disinformation, thus enabling individuals, social media platforms, and governments to counteract and prevent future occurrences effectively.

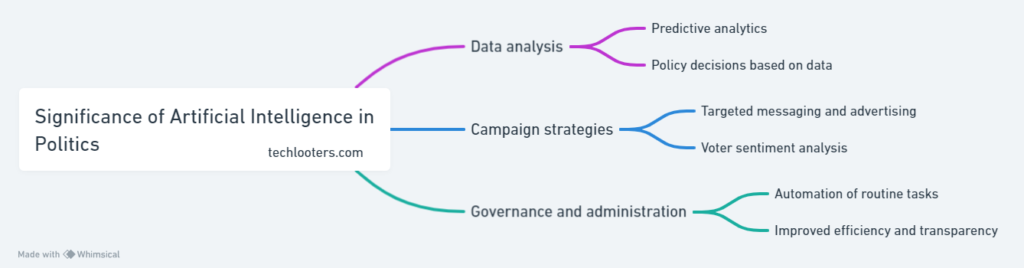

Significance of Artificial Intelligence in Politics

As the digital landscape continues to evolve, so too does the toolbox that political campaigners have at their disposal. In this digital age, Artificial Intelligence (AI) is rapidly gaining ground as a significant player in political strategy, data analysis, and targeted advertising. AI can analyze vast amounts of data, discern patterns, and make predictions that are invaluable for strategizing and forecasting political trends. Its ability to learn from data has made AI indispensable in the fast-paced environment of politics, where swift decision-making is essential.

AI has also changed political communication, engagement, and influence. Machine learning algorithms can now create highly personalized online content tailored to individual users based on their online activities and behavioral trends. This potent combination of data analysis and personalized content is capable of shifting public opinion and influencing voting behavior. AI's capacity to reach larger audiences at a granular level has changed the landscape of political communication, making it a key element in modern-day politics. It's clear that AI's role in politics extends beyond mere data analysis and has profound implications for how political discussions are shaped in an increasingly interconnected world.

Identifying AI-Generated Disinformation

Artificial Intelligence (AI) has introduced new complexities into politics and elections, with AI-generated disinformation often mixed in with legitimate information. Detecting this fake news can be challenging because it appears sophisticated and factual. It requires an understanding of the software mechanisms involved and a firm grasp of the political context and tactics associated with the source of the information. Appropriate tools, careful observation, and knowledge of political strategies are the first steps toward detecting AI-generated disinformation.

Deepfakes, AI-based techniques that superimpose existing images and videos onto source images or videos, have produced a large volume of nuanced yet misleading digital content. Such content can blend almost imperceptibly with accurate information, making it difficult to distinguish authentic updates from deceptive content.

Recognizing AI-generated disinformation goes beyond surface impressions; it demands an analysis in which the frequency, origin, context, and underlying agenda of the information are scrutinized. The most effective strategies for exposing AI-generated disinformation include, but are not limited to, detailed fact-checking, the use of advanced AI detection tools, and educating the public on how to identify questionable content.

The Role of Deepfakes in Election Disinformation

Deepfakes, increasingly sophisticated AI-manipulated video and audio, pose a significant threat in the realm of election disinformation. Built with machine learning technology, they can present extremely realistic video or audio content that shows public figures in scenarios that never occurred, saying words they never said. These AI-assisted fabrications have emerged only recently but have already sent ripples through political campaign strategies, exacerbating the age-old problem of falsehood in politics.

The use of deepfakes in election disinformation campaigns is not merely hypothetical; it is already in practice. Subtly altered media content has the potential to sway public opinion and create confusion surrounding the facts of political events and personal encounters. This distortion of reality not only undermines the credibility of individual politicians but also jeopardizes the integrity of the democratic process. A single deepfake video can plant seeds of doubt, sparking controversy and division among voters, and thereby manipulate the election narrative to benefit those who disseminate disinformation.

Impact of AI-Generated Election Disinformation on Voters

The pernicious sway of AI-generated election disinformation extends beyond the digital sphere, reaching the real world with palpable consequences. Digital falsehoods disseminated by artificial intelligence technologies have a profound impact on voters' political beliefs, choices, and behaviors. By manipulating images, videos, and text, AI can create cognitively persuasive messages that can easily alter voters' perceptions of political candidates or issues. This not only misleads the electorate but also fosters a climate of mistrust and anxiety.

Moreover, the accumulation of AI-generated election disinformation can exacerbate the political divide among voters. Artificial intelligence, armed with data analytics capabilities, can tailor disinformation to specific demographic groups, deepening their entrenched beliefs and fueling political polarization. In addition, such disinformation campaigns can suppress voter turnout by creating disillusionment or confusion about the electoral process. These tactics, unfortunately, can undermine the foundation of democratic societies: the informed vote.

The Proliferation of AI-Generated Disinformation in Social Media

Social media platforms have become the primary conduit for AI-generated disinformation, contributing significantly to the problem's magnification. Due to their sheer reach and easy access, these platforms provide fertile ground for spreading falsified information, enabling it to move faster and farther than ever before. Alarmingly, the use of AI technology to create deepfakes and other misleading content proliferated rapidly during recent election periods. This AI-generated disinformation often targets politically polarized groups and is skillfully designed to exploit existing biases, creating an echo chamber that amplifies its reach and effect.

Artificial intelligence holds tremendous potential; however, its potential for misuse should not be underestimated. Its application in generating disinformation in particular raises questions about its ethical use. Tailored algorithms and AI-powered bots are widespread on social media and capable of disseminating vast amounts of disinformation quickly. Unfortunately, because the technology is so sophisticated, this election disinformation often goes unnoticed by the average social media user, thus exacerbating the impact on voters' decision-making processes and overall perceptions of political events. Crafting strategies to combat the proliferation of AI-generated disinformation demands urgent attention from all stakeholders involved.

Strategies for Deciphering AI-Generated Election Disinformation

In this digital age, identifying AI-generated election disinformation has become a crucial skill. This involves developing a keen understanding of the various forms of disinformation, such as deepfakes, AI-generated text, and manipulated multimedia content. Understanding the contexts in which these forms of disinformation are most likely to appear is equally vital. For instance, during election periods, when political mudslinging often escalates, the proliferation of false information tends to increase.

The identification process can be bolstered by utilizing advanced tools designed to assist in the detection and debunking of AI-generated lies. AI and machine learning algorithms have been developed to identify deepfakes, while sophisticated analytical tools can help gauge the authenticity of text content. Engaging with reliable fact-checking organizations and platforms, which have become more prevalent and accessible thanks to the internet, is another strategy that can provide immense help in combating disinformation.
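As a rough illustration of the kind of signal a text-analysis tool might compute, the sketch below measures how often word trigrams repeat within a passage. The function name `repeated_ngram_ratio` and the choice of trigrams are assumptions made for this example; a high ratio is at most a weak hint that text deserves a closer look, not evidence that it was machine-generated, and real detectors combine many signals.

```python
from collections import Counter
import re


def repeated_ngram_ratio(text: str, n: int = 3) -> float:
    """Return the fraction of word n-grams that occur more than once in text.

    Hypothetical helper for illustration only; not a real detector.
    """
    # Lowercase and keep only word-like tokens so punctuation does not matter.
    words = re.findall(r"[a-z0-9']+", text.lower())
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    # Count every occurrence of any n-gram that appears at least twice.
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


sample = (
    "Vote early to protect your future. Vote early to protect your family. "
    "Vote early to protect your community."
)
print(f"repeated trigram ratio: {repeated_ngram_ratio(sample):.2f}")
```

In practice a score like this would be compared against typical values for human-written text of the same genre, since slogans and speeches repeat phrases on purpose.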

Education plays a key role here: awareness of AI-generated election disinformation should be widespread, allowing the public to participate knowingly in the debunking process. Awareness also creates demand, urging technology companies, social media platforms, and governing bodies to continually develop and upgrade tools that can quickly and effectively identify AI-generated falsehoods.

Necessary Tools for Detecting AI-Generated Disinformation

In addressing the menace of AI-generated disinformation, it is essential to examine the range of tools designed for its detection. These detection tools aim to distinguish natural human communication from artificially generated content. They include both software solutions and newer technological developments that use machine learning algorithms to scrutinize online data and separate deceptive content from genuine information.

Advanced tools such as reverse image search engines and video forensics empower individuals to verify the authenticity of visual content, a typical medium for AI disinformation tactics. Furthermore, text-based analysis tools can evaluate the linguistic patterns peculiar to AI-generated text, identifying uncanny repetitions and unnatural phrasing frequently associated with AI-generated content. Thus, these powerful tools can act as essential safeguards in the continuously escalating battle against AI-generated election disinformation.

- Reverse Image Search Engines: These tools allow users to upload an image and find its origins or other instances of its use online. By doing so, they can verify whether the image has been doctored or manipulated. This is particularly useful for debunking AI-generated images that may be used to spread disinformation.

- Video Forensics: Advances in technology have made it possible to create convincing deepfakes: videos in which a person's face or voice is replaced by another's. Video forensic tools can analyze these videos at the pixel level, identifying inconsistencies and signs of manipulation that might not be visible to the naked eye.

- Text-based Analysis Tools: These software solutions scrutinize written content for patterns characteristic of AI-generated text. They can detect unusual repetitions, unnatural phrasing, and other anomalies often found in machine-produced content.

- Machine Learning Algorithms: Leveraging the power of artificial intelligence, these algorithms are trained on vast datasets consisting of genuine and deceptive content. They learn how to differentiate between the two based on subtle differences in style, tone, structure, etc., making them efficient weapons against AI-generated disinformation.

- Social Media Monitoring Tools: Since social media platforms are hotbeds for spreading disinformation, monitoring tools designed specifically for these platforms play a crucial role. They track trends and detect suspicious activity patterns indicative of coordinated disinformation campaigns.
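One suspicious activity pattern mentioned in the last item, many accounts pushing the same message at nearly the same time, can be sketched in a few lines. Everything here is an assumption made for illustration: the `Post` record, the `find_coordinated_clusters` function, the ten-minute window, and the three-account threshold are hypothetical and do not describe any platform's actual monitoring system.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
import re


@dataclass(frozen=True)
class Post:
    """Hypothetical post record used only for this sketch."""
    account: str
    text: str
    posted_at: datetime


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so trivially edited copies match."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def find_coordinated_clusters(posts, window=timedelta(minutes=10), min_accounts=3):
    """Flag texts posted by at least min_accounts distinct accounts within window."""
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    flagged = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.posted_at)
        start = 0
        for end in range(len(group)):
            # Shrink the window from the left until it spans at most `window`.
            while group[end].posted_at - group[start].posted_at > window:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append((text, sorted(accounts)))
                break  # one flag per distinct text is enough
    return flagged


t0 = datetime(2024, 1, 1, 12, 0)
posts = [
    Post("acct_a", "Breaking: example claim!", t0),
    Post("acct_b", "breaking example claim", t0 + timedelta(minutes=2)),
    Post("acct_c", "Breaking, example claim.", t0 + timedelta(minutes=4)),
    Post("acct_d", "An unrelated post.", t0 + timedelta(minutes=5)),
]
for text, accounts in find_coordinated_clusters(posts):
    print(text, accounts)
```

Exact matching after normalization only catches the crudest copy-and-paste campaigns; paraphrased or AI-reworded messages would need a fuzzier similarity measure, and a flag of this kind is a prompt for human review rather than proof of coordination.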

In conclusion, no foolproof method is yet available for detecting all forms of AI-generated disinformation, because the threat evolves so rapidly. Even so, access to sophisticated detection tools enhances our ability to identify and counteract such malicious activities.

The Role of Fact-Checking in Countering AI-Generated Disinformation

Because it is central to preserving truthful narratives, fact-checking plays a crucial role in curbing the influx of AI-generated disinformation during elections. Fact-checkers use a variety of tools, resources, and expertise to dissect news items and assess their authenticity. They trace the origins of the information, establish the chronology of events, cross-check facts against multiple trustworthy sources, and, importantly, use specialized AI detection tools to help identify false narratives.

Because AI-generated disinformation is so well disguised, fact-checkers are now specifically trained to identify signs of AI manipulation within content. Continuous technological advancements have expanded the investigative toolbox, with tools capable of detecting speech patterns, facial inconsistencies, and textual quirks characteristic of AI-generated material. These methods not only provide a bulwark against misleading content that preys on an uninformed electorate but also uphold the value of truth in public discourse. Hence, fact-checking is indispensable in countering AI-generated election disinformation.

Educating the Public about AI-Generated Election Disinformation

In recent years, public education about AI-generated election disinformation has become increasingly vital. Disinformation targeted at uninformed citizens can drastically influence their political perceptions and voting decisions. This digital fabrication, often powered by artificial intelligence, exploits people's trust in shared content and their difficulty in distinguishing what is real from what is fake. Making the public aware of the tools and techniques behind disinformation could significantly reduce vulnerability to such manipulative content, and familiarity with misleading tactics is itself a defense against AI-powered election disinformation.

AI-generated deepfakes and misinformation can distort democracies by manipulating the electorate's view of aspiring leaders. It is therefore essential to teach individuals how to distinguish genuine information from deliberately manipulated narratives. Providing the public with the knowledge and skills to identify deepfakes and debunk false claims should be a priority for organizations involved in digital literacy. This could equip citizens with the critical thinking skills needed to recognize disinformation and help sustain democracy. Furthermore, regular awareness campaigns about the dangers of AI-generated election disinformation must be an integral part of a comprehensive public education strategy.

The Responsibility of Social Media Platforms in Handling AI-Generated Disinformation

With the advent of AI-generated disinformation, notably during election cycles, the importance of social media platforms proactively addressing this issue cannot be overstated. These platforms play a crucial role in information dissemination and therefore have a significant impact on public opinion. Detecting and counteracting AI-generated disinformation is thus a critical responsibility they ought to undertake.

Efforts should include bolstering their AI-powered detection systems for identifying deepfakes and other forms of disinformation. By integrating advanced algorithms that can recognize and flag potential disinformation, platforms could dramatically restrain the proliferation of deceptive content. Moreover, collaborating with fact-checkers and other external parties pursuing the same goal could enhance their capabilities. Social media platforms have a huge task at hand in handling AI-generated disinformation, yet meeting these responsibilities is paramount to preserving the integrity of information during crucial periods such as elections.

Government Measures Against AI-Generated Election Disinformation

Given the pervasive threat that AI-generated election disinformation poses to the integrity of the democratic process, it is crucial that government authorities take proactive measures for its prevention and detection. One such step involves the development and implementation of advanced technological tools able to detect deepfakes and other forms of fabricated information. This implementation extends not only to election monitoring mechanisms but also to the cybersecurity infrastructure of political institutions, making it harder for malicious actors to spread misleading content.

Additionally, some jurisdictions have introduced legislative measures to criminalize the malicious use of AI-generated disinformation. Such laws are intended to deter potential perpetrators and give authorities the power to penalize those who compromise the integrity of electoral processes. In concert with this, governments can run educational campaigns to raise public awareness of AI-generated electoral disinformation, enhancing citizens' ability to identify and report suspicious content they encounter. These measures, among others, reflect a commitment to preserving free and fair elections, undistorted by the insidious influence of AI-generated disinformation.

Future Perspectives: Preventing AI-Generated Election Disinformation

As artificial intelligence technology continues to evolve, so too must the strategies and tactics designed to combat election disinformation. Central to this is the development and promotion of tools capable of not just detecting but also preventing the spread of AI-generated disinformation. These tools could be engineered to identify and flag deepfakes, misleading content, and other deceptive digital material before it reaches the voting populace. Technologies such as blockchain, which enhance the transparency and traceability of information, could also play a critical role in addressing this issue.
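To make the traceability idea concrete, the sketch below builds a minimal hash chain in which each provenance entry records a fingerprint of a piece of content and the hash of the previous entry, so that later tampering with any entry becomes detectable. This is a simplified, single-machine illustration under stated assumptions: the names `append_entry` and `verify_chain` and the entry fields are hypothetical, and a real blockchain adds distributed consensus, signatures, and much more.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(chain: list, content: bytes, source: str) -> dict:
    """Append a provenance entry linked to the previous entry's hash."""
    prev_hash = chain[-1]["entry_hash"] if chain else GENESIS_HASH
    body = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "source": source,
        "prev_hash": prev_hash,
    }
    entry = dict(body, entry_hash=record_hash(body))
    chain.append(entry)
    return entry


def verify_chain(chain: list) -> bool:
    """Recompute every hash and check each entry links to its predecessor."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body.get("prev_hash") != prev_hash:
            return False
        if record_hash(body) != entry.get("entry_hash"):
            return False
        prev_hash = entry["entry_hash"]
    return True


chain = []
append_entry(chain, b"original campaign video bytes", "example-newsroom")
append_entry(chain, b"official press release text", "example-campaign")
print("chain valid:", verify_chain(chain))
```

A ledger like this can show that a given file matches what a source originally registered; it cannot, by itself, say whether the registered content was truthful.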

Governments, tech companies, and citizens share responsibility for mitigating the impact of AI-generated election disinformation. Comprehensive governmental regulations that require online political advertisements to be truthful could serve as one deterrent. Tech companies that host social media platforms should also proactively monitor content and introduce stringent measures to filter and quarantine disinformation. Additionally, fostering an informed public that is capable of critically evaluating online content is essential. This could be achieved through large-scale public awareness campaigns on media literacy, deepfakes, and the overall impact of AI-generated disinformation on democratic processes.

What is AI-generated election disinformation?

AI-generated election disinformation refers to false or misleading information about election issues that is created and propagated using artificial intelligence tools or systems.

How significant is the role of artificial intelligence in politics?

The role of artificial intelligence in politics is significant and growing. AI can analyze data, predict voter behavior, and even create targeted campaigns. However, one of its more concerning uses is in the creation and spread of disinformation, particularly during election periods.

What are deepfakes and how do they contribute to election disinformation?

Deepfakes are highly realistic manipulated or fabricated video or audio materials produced with AI technologies. They can be used to create false narratives or misleading representations of politicians or voters, contributing significantly to election disinformation.

What impact does AI-generated election disinformation have on voters?

AI-generated election disinformation can mislead voters, manipulate their opinions and choices, and undermine their confidence in the electoral process. This can have serious implications for democracy and the legitimacy of election outcomes.

How do social media platforms contribute to the proliferation of AI-generated disinformation?

Due to their vast reach and the speed at which information can spread, social media platforms can inadvertently become conduits for AI-generated disinformation. Misinformation can go viral quickly, reaching large audiences before it can be identified as false or misleading.

What are some strategies for detecting AI-generated disinformation?

Strategies for detecting AI-generated disinformation include using machine learning algorithms to identify patterns characteristic of AI-generated content, scrutinizing sources, and cross-referencing information with trusted news outlets.

What is the role of fact-checking in countering AI-generated disinformation?

Fact-checking is critical in countering AI-generated disinformation. By verifying information and debunking false claims, fact-checkers can help prevent the spread of disinformation and ensure the public is well-informed.

How can the public be educated about AI-generated election disinformation?

Public education can include awareness campaigns about the nature and risks of AI-generated disinformation, teaching critical media literacy skills, and promoting responsible information-sharing practices.

What responsibility do social media platforms have in handling AI-generated disinformation?

Social media platforms have a responsibility to monitor and control the spread of disinformation on their platforms. This can include implementing rigorous content moderation policies, developing technologies to detect and remove AI-generated content, and collaborating with fact-checkers.

What measures can governments take against AI-generated election disinformation?

Governments can introduce legislation to penalize the creation and spread of disinformation, invest in technology to detect AI-generated content, and cooperate with social media platforms to control the spread of false information.

What are the future perspectives on preventing AI-generated election disinformation?

Future perspectives on preventing AI-generated election disinformation include advancements in AI detection technologies, greater collaboration between governments, technology companies, and fact-checkers, and introducing strict regulations and penalties for the creation and dissemination of disinformation.